Right up front, Vivante states that it designed its GPU architecture to scale and to compete with Nvidia and ATI. It plans to vie with Nvidia's GTX/Maxwell in the next generation of ultra-mobile GPUs, John Oram writes from San Francisco.


Once a fledgling startup backed by semiconductor angel investors and a corporate investment from Fujitsu, Vivante was profitable five years after opening its doors. It is now headquartered in Sunnyvale, California, with offices in Shanghai and Chengdu, China. Over its nine-year history, Vivante Corporation has made its way into many markets.

The company flaunts its “firsts”: first to ship OpenGL ES 3.0 silicon and first to ship embedded OpenCL 1.1 silicon. It has shipped over 120 million units. Currently, Vivante says its GPUs are inside products from the majority of the top SoC vendors, mobile OEMs, TV OEMs, and automotive OEMs.

At IDF, Vivante heralded its advantage over its competitors, citing benchmark ratings in its slides. One example is the comparison of the GC1000 with the Mali-400 MP2, in which a diagram also points out the difference in size between the Mali and the smaller Vivante product.

Smart TVs from Vizio, LG U+, Lenovo, TCL, Hisense, and Changhong rely on Vivante. Chromecast's Internet-to-TV streaming is accelerated by Vivante for 3D gaming, composition, and the user interface. Set-top boxes from Toshiba in Japan and from three companies in Shenzhen, China (Huawei, Himedia, and GIEC) all use Vivante’s GPU acceleration.

Tomorrow’s cars will never be the same. Vivante is everywhere. Drivers will check out their positioning with ADAS (Advanced Driver Assistance Systems) displays, reverse guidance, pedestrian detection, and object distance indicators. In fact, Vivante was awarded the 2013 Frost & Sullivan Best Practices Award for Advanced Driver Assistance Systems.

Vivante used IDF to announce Vega, the culmination of seven years of architecture refinements and the experience of more than 100 SoC integrations. It is optimized to balance the big three: performance, power, and area. According to Vivante, the GPU delivers highest-in-class performance at GPU clock speeds above 1 GHz. The company even touts patented logarithmic-space, full-precision math units. Vega is optimized and configured from the production GPU cores GC2000, GC4000, and GC5000, and Vega GPUs have been delivered to lead customers for tapeout.

Vivante’s SDK is ready for GUI, gaming, and navigation applications. Vivante provides full API support across the GPU product line, including OpenGL ES 3.0, OpenCL 1.2, and DirectX 11 (feature level 9_3). The company prides itself on its Scalable Ultra-threaded Unified Shader, which offers up to 32x SIMD Vec-4 shaders, with up to 256 independent threads per shader operating on discrete data in parallel. Shaders make a wide range of effects possible by adjusting the hue, brightness, contrast, and saturation of the pixels, vertices, and textures that make up an image. They provide a programmable alternative to the hard-coded approach known as the fixed-function pipeline.
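To make the idea of a programmable shader concrete, here is a minimal CPU-side sketch in Python of the kind of per-pixel arithmetic such a program performs. It is an illustration only, not Vivante code: the function names `adjust_pixel` and `shade_image`, the parameter conventions, and the choice of Rec. 601 luma weights are all assumptions made for this example. A real shader would be written in a shading language and run across many GPU threads at once.

```python
def adjust_pixel(rgb, brightness=0.0, contrast=1.0, saturation=1.0):
    """Adjust one RGB pixel whose channels are floats in [0, 1].

    Hypothetical helper for illustration; the parameter conventions
    (additive brightness, multiplicative contrast and saturation)
    are assumptions, not taken from any vendor SDK.
    """
    r, g, b = rgb
    # Perceived grey level of the pixel (Rec. 601 luma weights).
    luma = 0.299 * r + 0.587 * g + 0.114 * b
    # Saturation: blend between the grey level and the original colour.
    channels = [luma + saturation * (c - luma) for c in (r, g, b)]
    # Contrast: stretch or squeeze each channel around mid-grey (0.5).
    channels = [0.5 + contrast * (c - 0.5) for c in channels]
    # Brightness: add a constant offset to every channel.
    channels = [c + brightness for c in channels]
    # Clamp back into the displayable range.
    return tuple(min(1.0, max(0.0, c)) for c in channels)


def shade_image(pixels, **params):
    """Run the same per-pixel function over a 2D grid of RGB tuples."""
    return [[adjust_pixel(p, **params) for p in row] for row in pixels]
```

Because the effect lives in an ordinary function rather than in fixed hardware logic, changing the look of the image only requires changing the function or its parameters, which is the flexibility a programmable pipeline offers over a fixed-function one.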

Vivante isn’t shy about pointing out its edge over the competition. On performance per unit of silicon area, it is taking on Tegra, Adreno, Mali, and IMG.

In conclusion, Vivante indicated that it isn’t overlooking the mass market either: its Vega Lite version still promises the smallest silicon area matched with extremely low power.

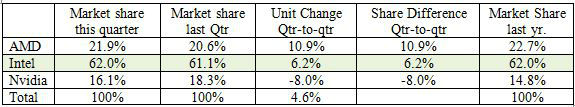

